2025-06-02

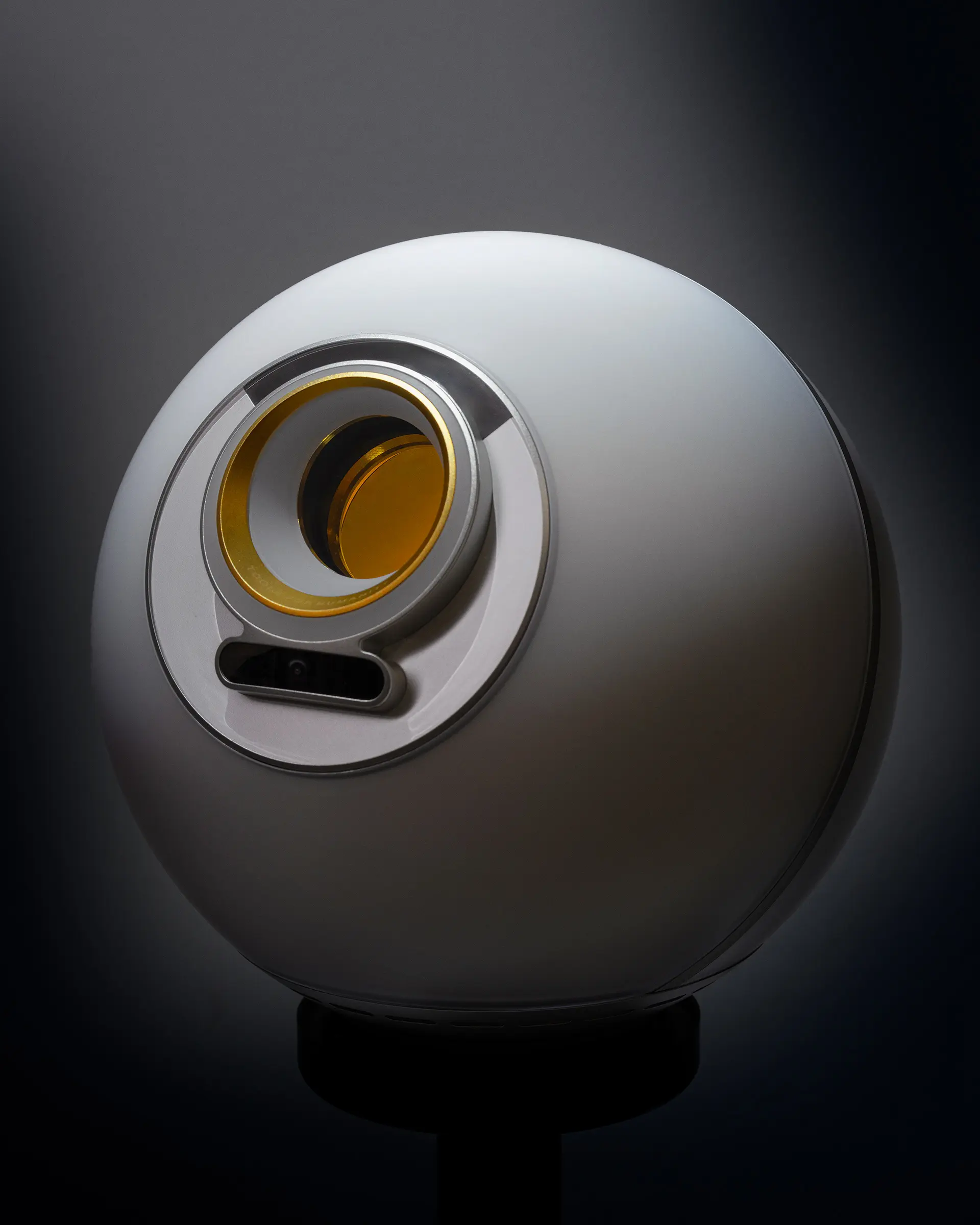

The Orb / Tools for Humanity / Worldcoin in 5 min

“The Orb” is popping up in all sorts of places, most recently in Time magazine’s “The Orb Will See You Now”. It’s being heralded as everything from the one and final solution for privacy-protecting digital identity online, to a civil liberties nightmare, to an indispensable tool for preventing an AI takeover, to a quick way to make $42, and more.

But what it actually does and how it works is remarkably hard to find out. In a recent podcast, the team behind the project took more than two hours to explain it, and that was only the highlights with no detail. No wonder theories are all over the place.

I listened to that podcast, and I think I can explain it in 5 min. Read on, and we’ll start at the place where the value occurs: the Relying Party.

“Relying Party” is a geek term for a website or app into which you want to log in. So: all the value of the Orb and the app and the networks and all of that comes from users being able to log into websites or apps with this system. (The system doesn’t even have a good name. It’s not the Orb, which in the grand scheme of things is not important. It’s not Worldcoin. Maybe it’s the World Network, but that name is so bland as to be almost useless. I’m going to call it WN for this post.)

So you can log into a website or app with WN. That feature is of course not unique. You can log into websites or apps with other things, like usernames and passwords, or your Google or Facebook account, or OpenID. But unlike with other ways of logging in, if you log into a website or app with WN, all the tech that WN throws at the problem guarantees to the website or app that a user who already has an account there cannot make a second one.

Have multiple Reddit accounts? Multiple Gmail addresses? Or multiple Amazon accounts? If those sites were to implement WN, you could not do that. Instead, those sites could be fairly certain that any new account on their site 1) was created by a human and 2) was created by a different human than any other account. (Actually, the site can let you create multiple accounts, but only if it knows that all of them belong to you. You cannot create separate accounts pretending to be two distinct humans. So I lied, you could have multiple Reddit accounts, but you could not hide that fact from Reddit.)

I don’t want to discuss here why this may be a good or a bad idea, only how it is done.

Second, if you log into two separate sites with WN, the two sites cannot compare notes and track you from one site to the other. With, say, logging in by e-mail address, the two sites can simply compare the e-mail addresses you gave them, and say, Oh, it’s the same Joe! Let’s show him underwear ads on this site too, because he browsed underwear on the first site. With WN that is impossible, because the two sites are being given different identifiers. (Those seem to be public keys, so there is a different key pair per site.) This is nice from a privacy perspective, similar to what Kim Cameron popularized twenty years ago with directed identity.

The site-specific key pairs are being generated from the single most important piece of data in the system, which is your master public key, stored on a blockchain. This public blockchain acts as a registry for all the identities in the system, i.e. for all humans registered with WN. But before you freak out, it’s not as bad as it sounds, because all that the blockchain stores is a public key. There is no other information on that blockchain or elsewhere associated with that key, such as your name or blood type. It’s just a long, basically random number.

So: the blockchain lists the public keys of all the people whose identity can be asserted by WN, and the technical machinery can derive relying-party-specific key pairs from any of those. The relying party can then be certain that the user has been verified, but it cannot tell which of the public keys on the blockchain, or which of the keys used with other sites, belong to the same user.
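To make the "different identifier per site" idea a bit more concrete, here is a minimal Python sketch. It is not WN's actual mechanism, which I have not looked at. As a simplifying assumption it derives a per-site pseudonym from a secret held on the user's device using HMAC-SHA256, and the names `site_identifier`, `shop.example` and `news.example` are made up for illustration. It also leaves out the part where the site is convinced the user is in the registry.

```python
import hashlib
import hmac
import secrets


def site_identifier(master_secret: bytes, site: str) -> str:
    """Derive a stable, site-specific pseudonym from one master secret.

    Assumption: HMAC-SHA256 is a stand-in for whatever derivation WN really uses.
    """
    return hmac.new(master_secret, site.encode("utf-8"), hashlib.sha256).hexdigest()


# Hypothetical user secret that never leaves the device.
joe_secret = secrets.token_bytes(32)

id_at_shop = site_identifier(joe_secret, "shop.example")
id_at_news = site_identifier(joe_secret, "news.example")

# The same site always sees the same identifier, so a second account is detectable.
assert id_at_shop == site_identifier(joe_secret, "shop.example")
# Two different sites see different values, so comparing notes does not reveal Joe.
assert id_at_shop != id_at_news
```

The point of the sketch is only the shape of the idea: one secret on the user's side, a deterministic but site-dependent value on the relying party's side.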

How does the key get onto that blockchain? It appears there is a small set of trusted actors that have the credentials to put keys onto it, and those trusted actors are the Orb stations WN has been setting up all over the world to get people registered. The actual keys being registered are generated on your mobile device. Only the public key is given to the system; the private one remains on your device.

So the Orb only exists to decide whether or not a public key you’ve created on your mobile device may be added to the registry of verified identities on that blockchain. That’s all. The key decision the Orb (and all of its Orb siblings in other locations) needs to make is: have any of us registered that human before? If yes, do not add their new key to the blockchain. If no, let’s add it.
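That decision can be sketched as a toy registry in Python. Everything here is hypothetical: the `Registry` class, the `human_token` string standing in for "this is the same human as before", and the plain string keys. The real system compares biometric data in a much more involved way and keeps the keys on a blockchain, so treat this as an illustration of the rule only.

```python
from dataclasses import dataclass, field


@dataclass
class Registry:
    """Toy stand-in for the public key registry plus the uniqueness check."""

    # What the blockchain would list: public keys and nothing else.
    verified_keys: list[str] = field(default_factory=list)
    # Hypothetical uniqueness tokens, one per human already registered.
    seen_humans: set[str] = field(default_factory=set)

    def register(self, human_token: str, public_key: str) -> bool:
        """Add the key only if this human has never been registered before."""
        if human_token in self.seen_humans:
            return False  # registered before: do not add the new key
        self.seen_humans.add(human_token)
        self.verified_keys.append(public_key)
        return True


registry = Registry()
assert registry.register("human-A", "pubkey-1")       # first visit: key is added
assert not registry.register("human-A", "pubkey-2")   # same human again: rejected
assert registry.verified_keys == ["pubkey-1"]
```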

To determine whether or not WN has registered you before, the Orb (really just a fancy camera) takes a picture of your iris, figures out its unique characteristics, breaks them into a gazillion data shards (which are entirely useless until you have brought all of them together; modern cryptography is often counter-intuitive) and distributes them so that it is hard to bring them back together. When somebody attempts to register again, the new registration attempt goes through the same process, but the system rejects it (using some fancy multi-party computation distributed over 3 universities) because the previous shards are there already.
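The claim that shards are useless until all of them are brought together is easy to demonstrate with the simplest possible scheme, XOR secret sharing. This is a sketch of the general idea, not of what WN does: the multi-party computation mentioned above is far more elaborate, and the function names and the sample byte string are made up.

```python
import secrets
from functools import reduce


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """XOR two equal-length byte strings."""
    return bytes(x ^ y for x, y in zip(a, b))


def split(secret: bytes, n: int) -> list[bytes]:
    """Split a secret into n shards; all n are needed to recover it."""
    if n < 2:
        raise ValueError("need at least two shards")
    # n - 1 shards are pure random noise of the same length as the secret.
    random_shards = [secrets.token_bytes(len(secret)) for _ in range(n - 1)]
    # The last shard is the secret XORed with all the noise shards.
    last = reduce(xor_bytes, random_shards, secret)
    return random_shards + [last]


def combine(shards: list[bytes]) -> bytes:
    """XOR all shards together to get the secret back."""
    return reduce(xor_bytes, shards)


code = b"hypothetical-biometric-code"
shards = split(code, 3)
assert combine(shards) == code  # all three shards together recover the secret
```

Any subset that is missing even one shard is statistically indistinguishable from random bytes, which is why spreading the shards across independent parties helps.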

That’s it. (Plus a few add-ons they have been building. Like verifying, using your smartphone’s camera, that it is indeed you operating the device when you are attempting to log into a site or app, so you can’t simply hand your key to another person who otherwise could amass or buy thousands of other people’s identities and effectively create thousands of accounts on the same site, all under their control. Or the cryptocurrency that the relying party has to pay in order to get WN to verify an authentication attempt, which pays for the sign-up bonus for new users, plus for the operation of the network.)

My impression:

Lots of tech and smarts. I have not looked at the algorithms, but I can believe that this system can be made to work more or less as described. It has a clearly unique value proposition compared to the many other authentication / identity systems that are found in the wild.

My major remaining questions:

- How does this system fail, and how would it be fixed if/when it does? You can be 100% certain of attacks, and 100% certain of very sophisticated attacks if WN gets substantial uptake. I have no good intuition about this, and I haven’t seen any substantive discussion about it either. (That would probably take a lot more than a two-hour podcast.) Given that one of their stated goals is that in the longer term no organization, including themselves, can take over the system, how would a rapid fix for a vulnerability even work?

- And of course: will anybody adopt it? Tech history is full of failed novel authentication systems, a notable example being Kim Cameron’s CardSpace, in spite of being shipped with every copy of Windows. And there I have my doubts. As is famously said, whatever your authentication or identity scheme, the first thing that a relying party asks for when implementing any of them is your e-mail address. If they continue to do that, the whole system would be largely pointless. But maybe there are some niche applications where this is different; I just haven’t seen many of them.

P.S. I’m sure I got some details wrong. Please correct me if you know better.
